A Practical Guide to Fine-Tuning for Product Managers

Benefits, examples, case studies, and step-by-step instructions. Everything you need to know to easily apply fine-tuning in practice.

Hey, Paweł here. Welcome to the premium edition of The Product Compass Newsletter!

There are two critical areas AI Product Managers should understand: fine-tuning and RAG.

In today’s issue, we focus on fine-tuning - a machine learning technique that turns a pretrained LLM into your product expert.

We will learn:

1. What is Fine-Tuning and Why We Need It

2. Fine-Tuning Examples

3. Fine-Tuning Process

4. A Fine-Tuning Case Study With Step-by-Step Instructions

5. Conclusion

In this post, I demonstrate applying fine-tuning in practice so you can easily:

Repeat the process,

Practice without coding,

Or even create a solution for your portfolio.

1. What is Fine-Tuning and Why We Need It

Traditional off-the-shelf models are trained on vast amounts of general Internet knowledge.

As a result, to get the right outputs required by your product you would often need to:

Use a powerful pretrained model.

Include a lot of context and examples in every prompt.

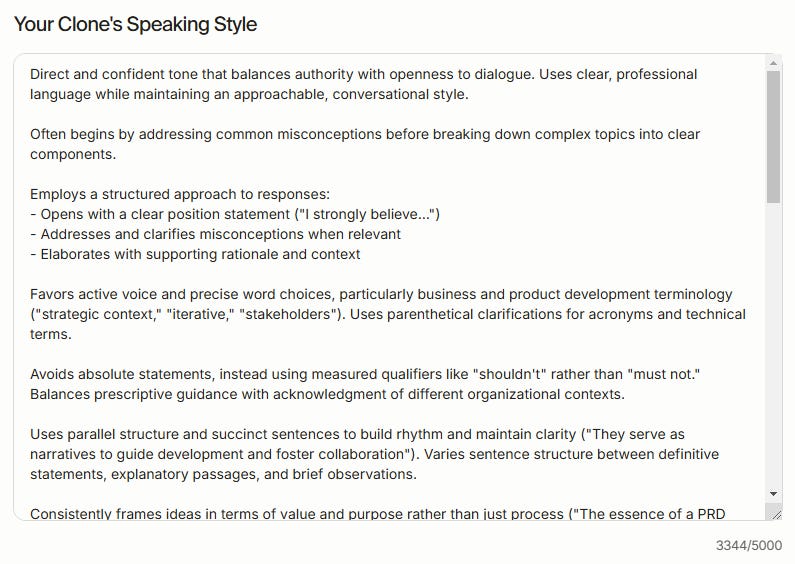

Just look at my Delphi clone instructions. They are attached to the prompt whenever the user asks a question:

I never understood why we waste so many tokens!

And even the most powerful models with the most detailed prompts often fail to generalize in narrow domains.

As a result, inference costs can increase dramatically while the quality of outputs and performance drop.

Here’s where fine-tuning comes in.

Fine-tuning allows you to use smaller, more cost-effective models that internalize your specific context directly in the model weights.

A fine-tuned model can provide more accurate answers without extensive instructions.

I’d argue that you should use fine-tuned models for most tasks your product executes frequently.
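To make the cost argument concrete, here is a back-of-the-envelope sketch. Every number in it (request volume, prompt lengths, per-token prices) is a made-up placeholder, not a real price list, and it counts input tokens only, ignoring output tokens and the one-off cost of training.

```python
def monthly_input_cost(requests, prompt_tokens, price_per_million):
    """Dollar cost of input tokens for one month of traffic."""
    return requests * prompt_tokens * price_per_million / 1_000_000


# Hypothetical scenario: every number here is a placeholder, not a real price.
REQUESTS_PER_MONTH = 100_000

# Large model with long instructions attached to every prompt.
long_prompt_cost = monthly_input_cost(REQUESTS_PER_MONTH, 3_000, 2.50)

# Smaller fine-tuned model with a short prompt (the context lives in the weights).
fine_tuned_cost = monthly_input_cost(REQUESTS_PER_MONTH, 300, 0.25)

print(f"Long prompts: ${long_prompt_cost:,.2f} per month")
print(f"Fine-tuned:   ${fine_tuned_cost:,.2f} per month")
```

Swap in your own traffic and pricing to see whether the gap is large enough to justify a fine-tuning effort.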

2. Fine-Tuning Examples

Typical fine-tuning applications include:

Chatbot Personalization: When we align our chatbot’s tone and responses with our brand’s unique style and vocabulary.

Domain-Specific Expertise: When we equip AI with in-depth knowledge specific to our industry or product, reducing dependence on retrieval-augmented generation (RAG) techniques. For example, fine-tuning helps companies like Luminance analyze legal documents while ensuring precise and reliable outputs.

User Behavior Analysis: When we use past customer interactions to predict user actions, such as identifying those at risk of churning or ready to be engaged by sales (Product Qualified Leads).

Content Classification: When we use a fine-tuned model to categorize documents, prioritize support issues, or determine customer intent.

Summarization, Sentiment Analysis, Language Translation (especially in narrow domains), and more.

3. Fine-Tuning Process

Think of fine-tuning as a process of turning a generic AI into an expert in your area.

The process described below works for:

Supervised Fine-Tuning: Each example in your dataset has a clear “right answer” (labeled data).

Unsupervised Fine-Tuning: The model continues training on unlabeled text, such as your internal documents, and picks up patterns on its own without explicit “right answers.”

Mixed Approach: A combination of supervised and unsupervised learning.

But for most products, supervised fine-tuning is the go-to approach. It gives you more predictable results, improves performance on specific tasks, and allows you to easily measure improvements.

It’s also the best-supported option among available tools, which I’ll demonstrate later in this post.

We’ll focus on supervised fine-tuning.

Step 1: Prepare the Data

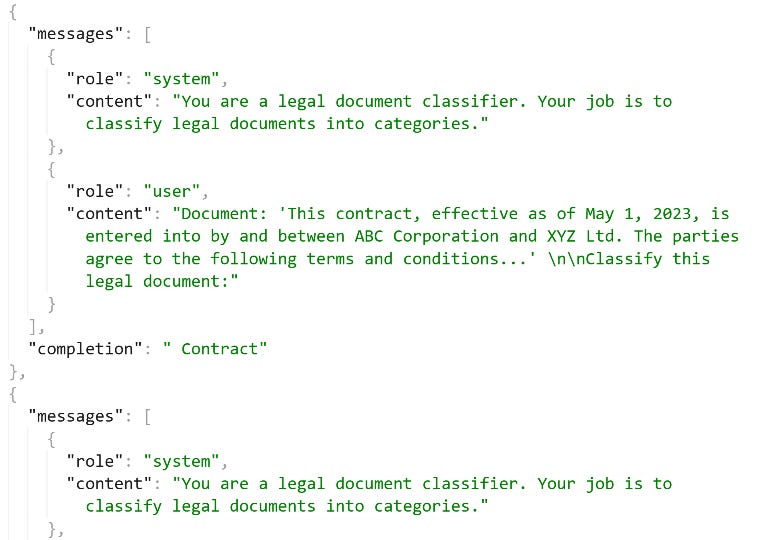

The first step is collecting examples that reflect your product’s tasks along with their expected answers. Training data is typically stored in a .jsonl (JSON Lines) file, with one JSON object per line and one example per object.

For example, a legal document classification .jsonl file might look like this:
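The sketch below builds such a file's contents in Python, assuming the chat-style format that OpenAI's fine-tuning API accepts (one JSON object per line with a `messages` list). The documents, labels, and system prompt are invented for illustration.

```python
import json

# Hypothetical labeled examples: (document text, expected category).
examples = [
    ("This Agreement is entered into by and between the Landlord and the Tenant.",
     "lease_agreement"),
    ("The Receiving Party shall not disclose any Confidential Information.",
     "nda"),
]

# Hypothetical instruction shared by every training example.
SYSTEM_PROMPT = "Classify the legal document into one category."


def to_chat_record(text, label):
    """Wrap one labeled example in the chat format: system, user, assistant."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": text},
            {"role": "assistant", "content": label},
        ]
    }


def to_jsonl(pairs):
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(to_chat_record(t, l)) for t, l in pairs)


jsonl_text = to_jsonl(examples)
print(jsonl_text)
```

The assistant message holds the “right answer” the model should learn to produce for the given user message.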

In practice, you need anywhere from a few dozen to a few hundred examples.

The key factor here is data quality. It’s far more critical than quantity.

A good practice is to split the data into two groups:

Training data (80-90% of the entire dataset)

Testing data (10-20% of the entire dataset)

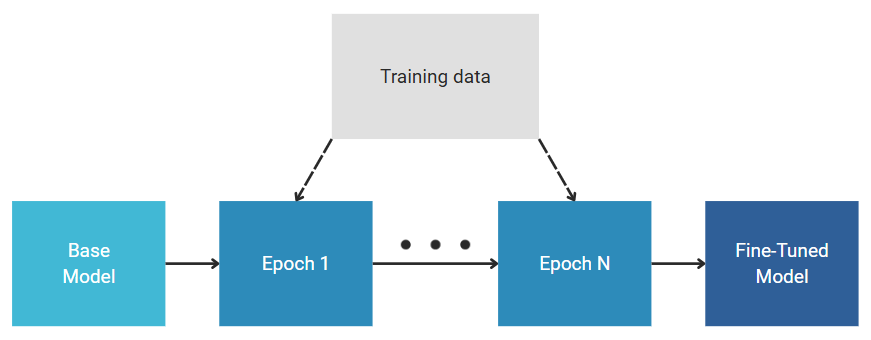
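The split described above can be sketched in a few lines of Python. The function name, the 10% default, and the fixed random seed are assumptions made for this example; shuffling first keeps the test set from being just the newest or oldest examples.

```python
import random


def split_dataset(examples, test_fraction=0.1, seed=42):
    """Shuffle the examples, then hold out a fraction of them for testing."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split repeatable
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Stand-in dataset: integers instead of real labeled examples.
train, test = split_dataset(range(957))
print(len(train), len(test))
```

With 957 examples and a 10% test fraction, this gives 861 training and 96 testing examples, the same sizes used in the case study later in this post.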

Step 2: Train the Model

Next, you “teach” the model on your training dataset. This is where the model’s weights (its internal parameters) are updated to fit your examples.

Many solutions, like the OpenAI Platform UI, let you easily automate this process without coding.
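If you prefer code over the UI, the same step can be sketched with the official `openai` Python package. This is a minimal sketch, assuming version 1.x of that package and an API key in your environment; the function name and the `train.jsonl` path are hypothetical.

```python
def start_fine_tuning(client, training_path, base_model="gpt-4o-mini-2024-07-18"):
    """Upload a .jsonl training file and start a fine-tuning job.

    `client` is expected to be an `openai.OpenAI()` instance.
    Returns the ID of the created job.
    """
    # Upload the training data so the platform can read it.
    with open(training_path, "rb") as f:
        uploaded = client.files.create(file=f, purpose="fine-tune")

    # Start the job; training itself runs on the provider's side.
    job = client.fine_tuning.jobs.create(
        training_file=uploaded.id,
        model=base_model,
    )
    return job.id


# Example usage (hypothetical file path, needs the openai package and an API key):
# from openai import OpenAI
# job_id = start_fine_tuning(OpenAI(), "train.jsonl")
```

Passing the client in as a parameter keeps the function easy to try out with a stand-in object before spending money on a real job.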

Step 3: Test and Improve

Even though many solutions automate testing to some extent in the form of a “loss function,” I recommend testing the fine-tuned model separately (I demonstrate it later in this post).

The reported “loss function” results might not be conclusive. I learned to use them as an approximation of model quality.
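A separate test can be as simple as running the held-out examples through the model and counting how often it matches the expected answer. The sketch below assumes a classification task; `evaluate`, the stub predictor, and the sample data are all hypothetical. In a real test, `predict` would call your fine-tuned model instead.

```python
from collections import Counter


def evaluate(predict, test_examples):
    """Return accuracy and a count of (expected, predicted) mistakes."""
    correct = 0
    mistakes = Counter()
    for text, expected in test_examples:
        predicted = predict(text)
        if predicted == expected:
            correct += 1
        else:
            mistakes[(expected, predicted)] += 1
    return correct / len(test_examples), mistakes


# Hypothetical held-out examples: (input text, expected label).
test_examples = [
    ("Tenant shall pay rent monthly.", "lease_agreement"),
    ("Recipient must keep all information confidential.", "nda"),
    ("Landlord may inspect the premises.", "lease_agreement"),
    ("Employee agrees to a non-compete period.", "employment_contract"),
]


def stub_predict(text):
    """Stand-in for a model call: a naive keyword rule."""
    return "nda" if "confidential" in text.lower() else "lease_agreement"


accuracy, mistakes = evaluate(stub_predict, test_examples)
print(f"Accuracy: {accuracy:.0%}")
print(mistakes.most_common())
```

Looking at which mistakes are most common often tells you more than the accuracy number alone, for example which categories need more training examples.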

Next, as with everything in product, we get feedback, iterate, and improve.

4. A Fine-Tuning Case Study With Step-by-Step Instructions

I’ve been looking for the right example and decided to fine-tune an LLM to predict how many upvotes a Reddit post in r/ProductManagement will receive based solely on its title and description.

(It’s similar to a document classification initiative I worked on commercially, but it didn’t require me to break my NDA, quite an advantage! Plus, I could easily get the data.)

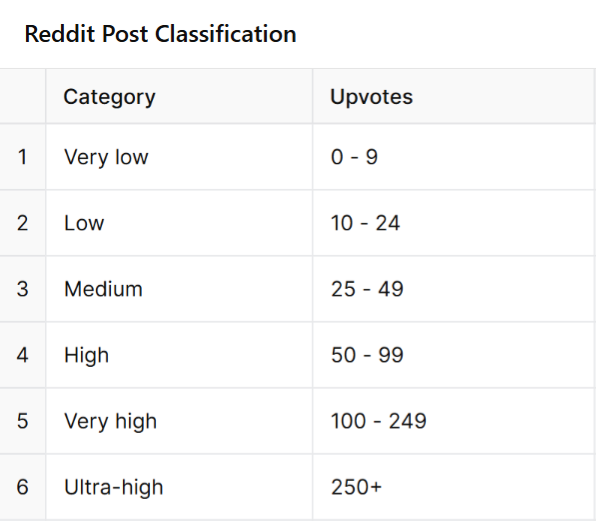

I defined the following post categories based on the number of upvotes:

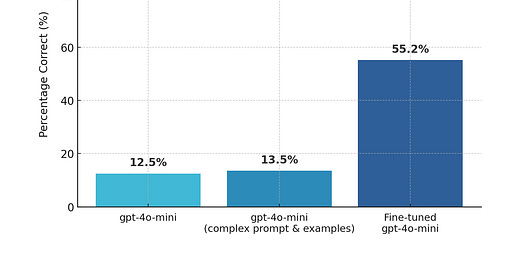

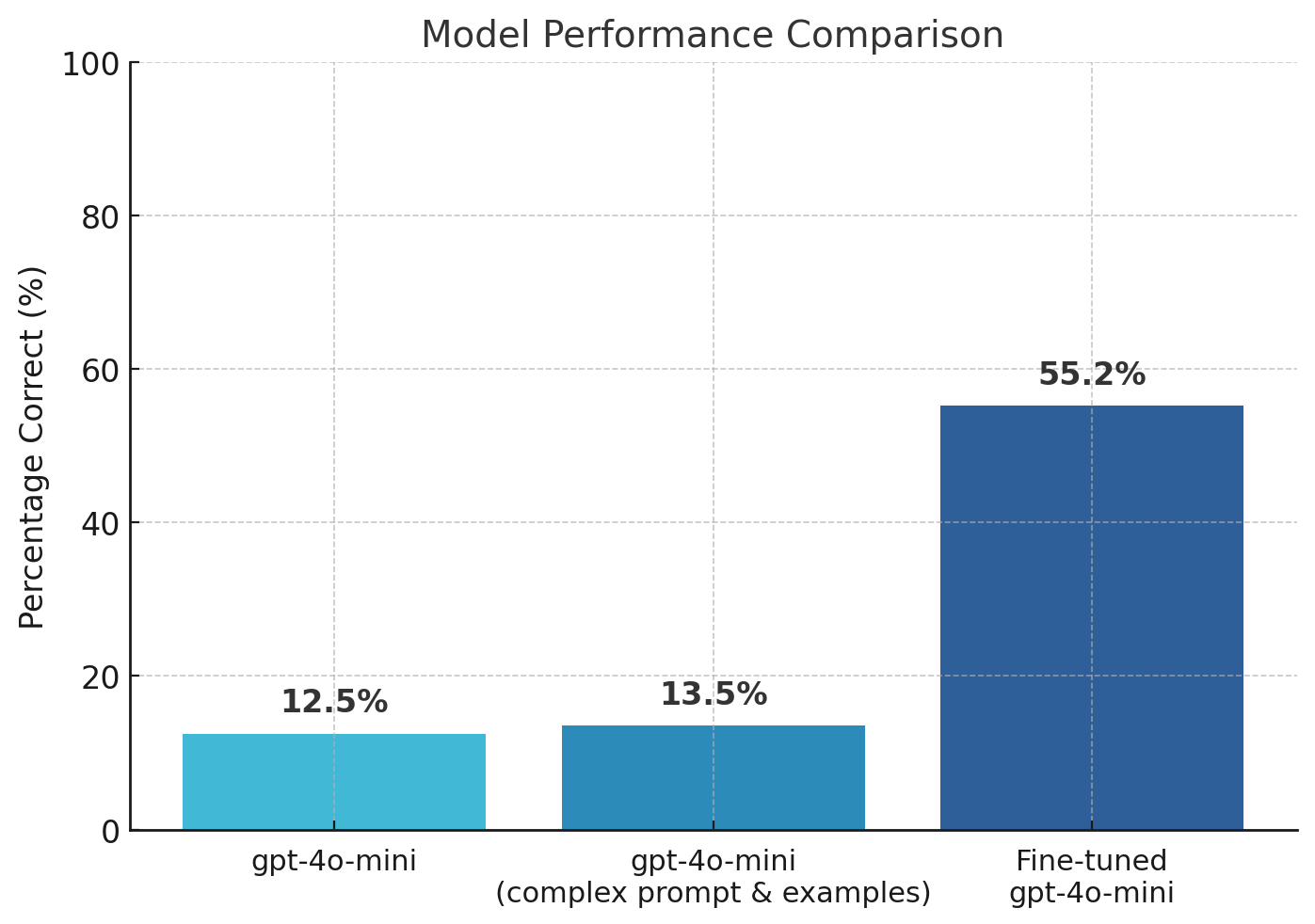

Then, I fine-tuned gpt-4o-mini-2024-07-18 on 861 posts and tested on 96 different posts. The results:

It worked better than expected.

Unlike pretrained models, a fine-tuned model can, in most cases, accurately predict which upvote category a Reddit post will fall into.

Here’s everything I did to prepare data, fine-tune, and test an LLM without coding.

You can easily repeat this process.

Step 1: Prepare the Data
