Introduction to AI Product Management: Neural Networks, Transformers, and LLMs

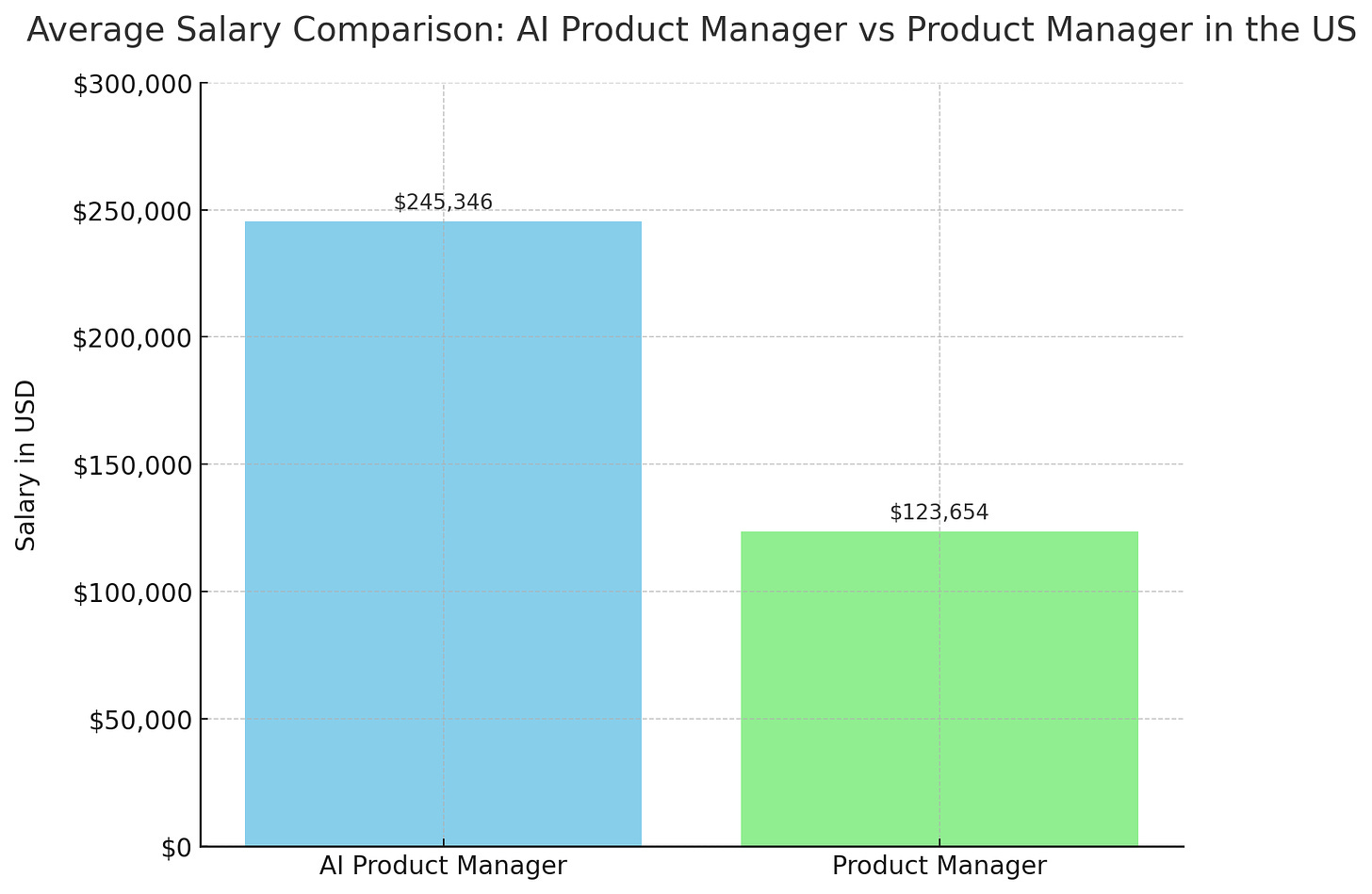

AI Product Manager in the US: $245,346. Product Manager: $123,654 per year.

Hey, Paweł here. Welcome to the premium edition of The Product Compass!

Every week, I share actionable tips and resources for PMs.

For more details, see the archive: https://res.productcompass.pm/the-best-of-the-product-compass-newsletter.


Understanding Artificial Intelligence (AI) is becoming an essential part of our job.

According to Glassdoor, the average salary for an AI Product Manager in the US is $245,346 per year, compared to $123,654 for a Product Manager.

On top of that, AI is quickly becoming a commodity, meaning you will need a basic understanding of it sooner rather than later in your career.

In this post, we explore the basic concepts every PM needs to be familiar with. We’ll discuss:

The AI Landscape and Key Terms

How Neural Networks Work

How Transformers and LLMs Work

Differences Between Transformers and the Human Brain

Conclusions

If enough people like this post, we’ll continue with topics like:

A framework for managing AI products: discovery, delivery, success metrics.

Your guide to fine-tuning: How to start, best practices, and common mistakes.

How to use AI to build products for your PM portfolio without coding.

AI agents and how you can leverage them with practical tools and examples.

Let’s dive in.

Before we proceed, I’d like to recommend the AI Product Management Certification. It’s a six-week cohort taught by instructors like Miqdad Jaffer (Product at OpenAI).

I participated in the cohort in Spring 2024. The next session starts on Nov 9, 2024.

Note: After publishing this post, I was able to secure a $250 discount for my audience if you use this link to sign up:

This post is not sponsored.

1. The AI Landscape and Key Terms

AI is a branch of computer science that enables machines to perform tasks that normally require human intelligence, such as seeing, understanding, and creating.

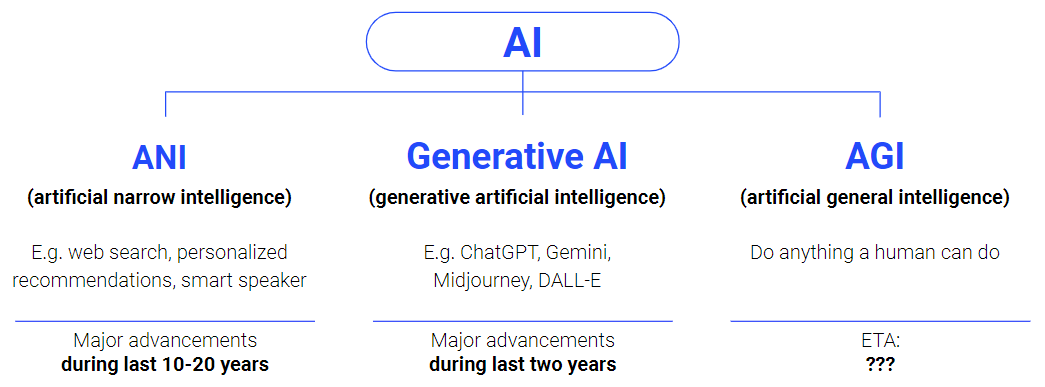

The most important type for us is Generative AI: AI that can create new content based on the data it was trained on. Instead of just analyzing or sorting existing data, these models generate new examples:

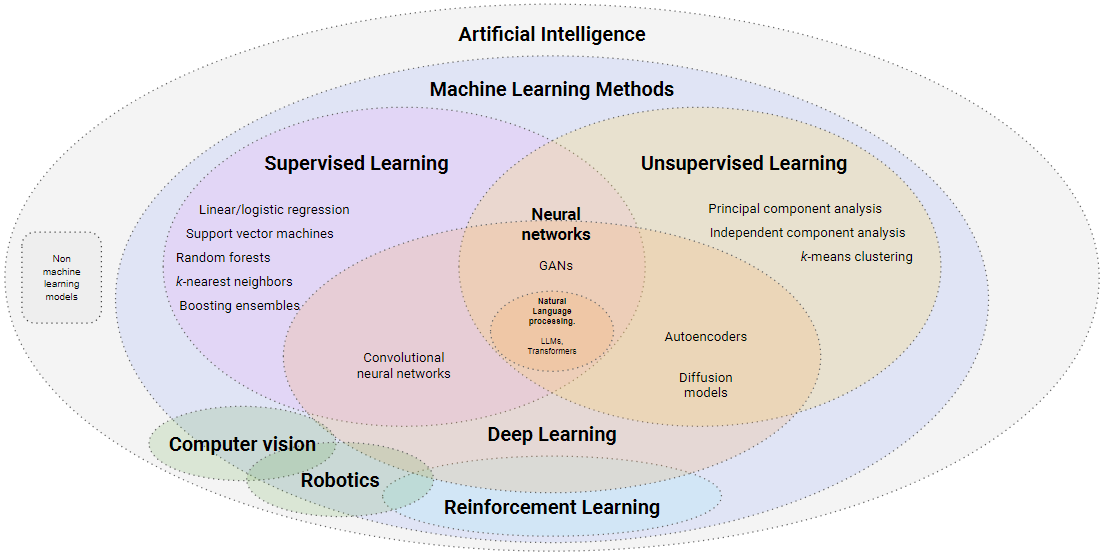
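Real generative models are vastly more sophisticated, but the core idea (learn patterns from training data, then sample new content from them) can be sketched with a toy bigram model in Python. The corpus and the function names `train_bigrams` and `generate` are hypothetical and exist only for illustration:

```python
import random
from collections import defaultdict

# Hypothetical toy training corpus.
corpus = "the model reads text . the model writes text . the user reads the answer ."

def train_bigrams(text):
    """Record which word follows which in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, length, seed=0):
    """Sample a new word sequence, one word at a time, from what was learned.
    Words that followed more often in training are picked more often."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        options = followers.get(out[-1])
        if not options:  # no known continuation: stop early
            break
        out.append(rng.choice(options))
    return " ".join(out)

model = train_bigrams(corpus)
print(generate(model, "the", 8))
```

Every word pair the model produces appeared in its training text, yet the full sentence may be new. LLMs build on the same "predict the next piece" idea at a vastly larger scale, using neural networks instead of simple counts.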

Let’s look at the AI landscape and the machine learning methods that help Generative AI make sense of data and perform these tasks. Here are four important types of machine learning every PM should know:

Supervised Learning: The model is trained on a labeled dataset, meaning each input comes with the correct output. This is also the basic approach to fine-tuning a model to perform specific tasks in your product.

Unsupervised Learning: The model learns from data without labels. It has to find patterns and structures independently, like solving a puzzle without a picture.

Reinforcement Learning: The model learns by interacting with an environment. It makes decisions and receives feedback in the form of rewards or penalties. Over time, it aims to maximize the total reward. A well-known example is AlphaGo, which improved its Go play partly through self-play.

Deep Learning: This isn't a separate category but a subset of machine learning that uses neural networks with many layers (hence "deep"). These deep neural networks can learn complex patterns and are applied in supervised, unsupervised, and reinforcement learning.
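To make the difference between supervised and unsupervised learning concrete, here is a minimal Python sketch. The techniques are stand-ins chosen for brevity (a nearest-centroid classifier for the supervised case, k-means clustering for the unsupervised one), and the data points are made up:

```python
import math

def centroid(points):
    """Average of a list of (x, y) points."""
    xs, ys = zip(*points)
    return (sum(xs) / len(xs), sum(ys) / len(ys))

def nearest(point, centers):
    """Key of the center closest to `point`."""
    return min(centers, key=lambda k: math.dist(point, centers[k]))

# Supervised: every training point comes with the correct label.
labeled = [((1, 1), "small"), ((2, 1), "small"), ((8, 9), "large"), ((9, 8), "large")]
by_label = {}
for point, label in labeled:
    by_label.setdefault(label, []).append(point)
class_centers = {label: centroid(pts) for label, pts in by_label.items()}
print(nearest((7, 7), class_centers))  # predicts one of the labels it was shown

# Unsupervised: no labels, so k-means has to discover the groups itself.
unlabeled = [(1, 1), (2, 1), (1, 2), (8, 9), (9, 8), (8, 8)]
centers = {0: unlabeled[0], 1: unlabeled[3]}  # naive initialization
for _ in range(10):
    clusters = {k: [] for k in centers}
    for p in unlabeled:
        clusters[nearest(p, centers)].append(p)
    # Move each center to the average of the points assigned to it.
    centers = {k: centroid(pts) for k, pts in clusters.items() if pts}
print(clusters)
```

The supervised model can only predict labels it was shown, while k-means finds the two groups on its own but cannot name them.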

In this series, we focus on Large Language Models (LLMs) and LLM-enabled products and features. LLMs are pre-trained on large datasets and can be deployed across various domains with minimal additional training. Because you don't have to train them from scratch, they are also typically the easiest and most cost-effective option to integrate.

So, let’s zoom in:

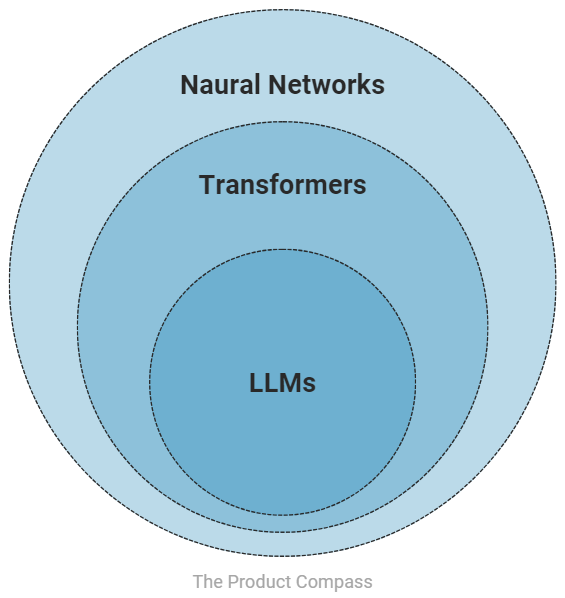

The key terms every Product Manager needs to know:

Neural Networks: Computational models inspired by the human brain. They consist of interconnected nodes (neurons) that process data.

Transformers: A neural network architecture designed for sequential data. Transformers use self-attention mechanisms to weigh the importance of different parts of the input data.

LLMs: Models built on the transformer architecture and trained on extensive datasets to understand and generate human-like text. Their training typically combines unsupervised (more precisely, self-supervised) pre-training on unlabeled text with supervised fine-tuning on labeled examples, all on a deep learning architecture.
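The self-attention idea can be sketched in a few lines of Python. This is a simplified version of scaled dot-product attention under one loud assumption: queries, keys, and values are the raw input vectors, whereas real transformers first multiply them by learned weight matrices and run many attention heads in parallel. The embeddings are hypothetical:

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Simplified scaled dot-product self-attention.

    Assumption: queries, keys, and values are the input vectors themselves;
    real transformers use learned projections for each.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        # How relevant is every token (including itself) to this token?
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        # New representation: weighted average of all token vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional embeddings for three tokens.
tokens = ["My", " name", " is"]
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(embeddings)
for token, row in zip(tokens, weights):
    print(repr(token), [round(w, 2) for w in row])
```

Each row of weights shows how much one token "pays attention" to every token in the input, and each output vector blends the inputs accordingly.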

Let’s explore them in detail.

2. How Neural Networks Work

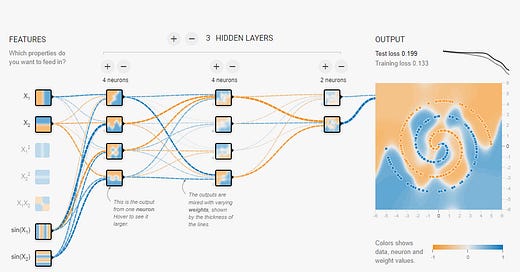

To grasp how neural networks work, you can experiment with the TensorFlow Playground: https://playground.tensorflow.org/. It's a free, interactive tool that allows you to see neural networks in action.

For example, in my recording below, you can see how the model learns to classify data points. The more features we use and the more neurons we add to the network, the more complex the patterns it can recognize:

How does it work?

You start by selecting a sample dataset, like circles or spirals.

The playground examines each data point, noting features like its position on the X and Y axes. Those features then feed the first layer of the network.

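Each layer transforms its input data, but not every connection between neurons is equally important. Each connection has a numerical weight that determines how strongly it influences the next neuron; together, these values are called the model's weights.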

An activation function determines how each neuron responds to the weighted sum of its inputs. The Tanh function I selected introduces non-linearity into the model, which lets it learn curved decision boundaries.

In each training round (epoch), the network works through the entire dataset in batches, e.g., 10 data points at a time. For each batch, it computes predictions classifying the data points based on the model's current weights.

Next, a loss function measures how far off the model is by comparing its predictions to the actual labels.

Through backpropagation, the network calculates how much each weight contributed to the loss, and the weights are updated to reduce it.

You can see how well the model classifies data points on a scatter plot, with colors indicating different categories.
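The training steps above can be sketched without any libraries as a tiny network with one hidden layer of Tanh neurons. It is a minimal illustration, not how the TensorFlow Playground is implemented: the circle-shaped grid dataset, the mean squared error loss, and the hyperparameters (`HIDDEN`, `LEARNING_RATE`, `BATCH_SIZE`, `EPOCHS`) are assumptions chosen for readability:

```python
import math
import random

rng = random.Random(42)  # fixed seed so runs are reproducible

# Hypothetical toy "circle" dataset on a grid:
# label +1 inside a circle around the origin, -1 outside.
grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
data = [((x, y), 1.0 if x * x + y * y < 0.5 else -1.0) for x in grid for y in grid]

HIDDEN = 4  # neurons in the single hidden layer
w1 = [[rng.uniform(-0.5, 0.5) for _ in range(2)] for _ in range(HIDDEN)]  # input -> hidden
b1 = [0.0] * HIDDEN
w2 = [rng.uniform(-0.5, 0.5) for _ in range(HIDDEN)]  # hidden -> output
b2 = 0.0

def forward(x):
    """Weighted sums passed through tanh, layer by layer."""
    h = [math.tanh(w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(w1, b1)]
    out = math.tanh(sum(w * hi for w, hi in zip(w2, h)) + b2)
    return h, out

def dataset_loss():
    """Mean squared error between predictions and true labels."""
    return sum((forward(x)[1] - y) ** 2 for x, y in data) / len(data)

LEARNING_RATE, BATCH_SIZE, EPOCHS = 0.1, 5, 300
loss_before = dataset_loss()
for epoch in range(EPOCHS):
    rng.shuffle(data)
    for start in range(0, len(data), BATCH_SIZE):
        batch = data[start:start + BATCH_SIZE]
        # Accumulate gradients over the batch (backpropagation via the chain rule).
        g_w1 = [[0.0, 0.0] for _ in range(HIDDEN)]
        g_b1 = [0.0] * HIDDEN
        g_w2 = [0.0] * HIDDEN
        g_b2 = 0.0
        for x, y in batch:
            h, out = forward(x)
            d_out = 2 * (out - y) * (1 - out ** 2)  # dLoss / d(output pre-activation)
            g_b2 += d_out
            for j in range(HIDDEN):
                g_w2[j] += d_out * h[j]
                d_h = d_out * w2[j] * (1 - h[j] ** 2)  # error pushed back to hidden neuron j
                g_b1[j] += d_h
                g_w1[j][0] += d_h * x[0]
                g_w1[j][1] += d_h * x[1]
        # Gradient descent step: move each weight against its average gradient.
        n = len(batch)
        b2 -= LEARNING_RATE * g_b2 / n
        for j in range(HIDDEN):
            w2[j] -= LEARNING_RATE * g_w2[j] / n
            b1[j] -= LEARNING_RATE * g_b1[j] / n
            w1[j][0] -= LEARNING_RATE * g_w1[j][0] / n
            w1[j][1] -= LEARNING_RATE * g_w1[j][1] / n
loss_after = dataset_loss()
print(f"loss before: {loss_before:.3f}, after: {loss_after:.3f}")
```

Writing the gradients by hand shows what backpropagation does: the output error is pushed backward through each layer using the chain rule. Frameworks like TensorFlow compute these gradients automatically.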

While powerful, traditional neural networks struggle with sequential data and long-range dependencies, such as those found in natural language.

For example, a similar approach might be used to classify individual letters of the alphabet (as in OCR). However, this architecture would be insufficient to translate a paragraph of text from English to French.

How many features would you need to consider for an entire paragraph?

This is where transformers come into play.

3. How Transformers and LLMs Work

Transformers revolutionized AI by handling sequential data like text and speech effectively. Since the same principles apply to other formats, let's focus on text-based models.

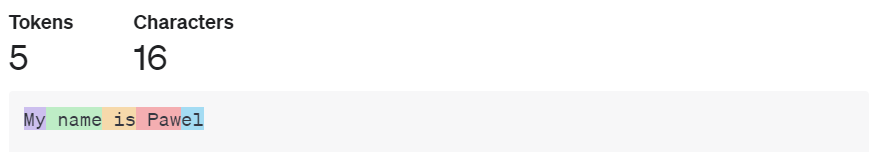

Let’s say we want to translate the sentence “My name is Pawel” from English to French.

Step 1: Tokenization

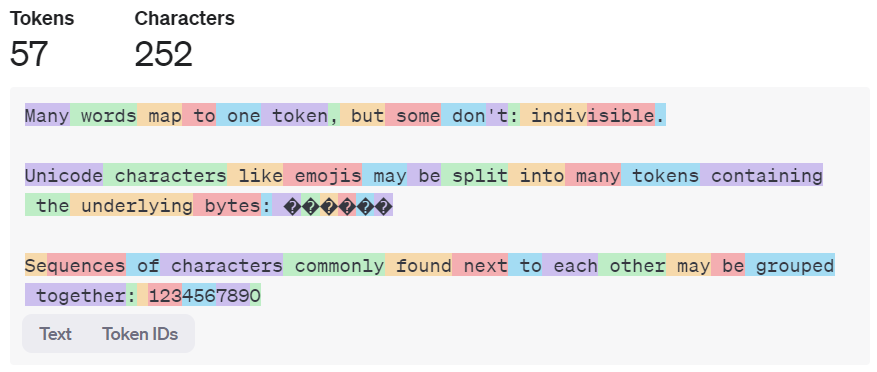

The first step is to split the input text into tokens. Many examples online suggest that each word is a token, but that’s an oversimplification. In practice, tokens can represent parts of words or even single characters, depending on the tokenizer. For example:
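The split used in this post can be reproduced with a toy greedy tokenizer in Python. The vocabulary is hypothetical and picked to match the example; production tokenizers (such as byte-pair encoding ones) learn vocabularies of tens of thousands of pieces from data, so a real tokenizer may split the same sentence differently:

```python
# Hypothetical toy vocabulary chosen to reproduce this post's example.
VOCAB = {"My", " name", " is", " Paw", "el"}

def tokenize(text, vocab=VOCAB):
    """Greedy longest-match tokenization: at each position, take the longest
    vocabulary entry that matches; otherwise fall back to a single character."""
    tokens, i = [], 0
    longest = max(len(piece) for piece in vocab)
    while i < len(text):
        for size in range(min(longest, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab or size == 1:
                tokens.append(piece)
                i += size
                break
    return tokens

print(tokenize("My name is Pawel"))
```

Notice that leading spaces belong to the tokens, and the unfamiliar name is broken into smaller pieces.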

A more complex default example presented by OpenAI:

Step 2: Positional Encoding

Since transformers process all tokens simultaneously, they need a way to understand their order. This is achieved by adding information about each token's position to its representation:

#1 “My”

#2 “ name”

#3 “ is”

#4 “ Paw”

#5 “el”

This ensures that even though the model processes tokens in parallel, it knows which token came first, second, and so on.
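One well-known way to encode positions is the sinusoidal scheme from the original Transformer paper, sketched below in Python. Many modern LLMs use other approaches instead (for example, learned or rotary position embeddings), and the embedding values here are hypothetical:

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal positional encoding: even dimensions use sine, odd
    dimensions use cosine, and each sine/cosine pair uses a different frequency."""
    pe = []
    for i in range(d_model):
        angle = position / (10000 ** ((i - i % 2) / d_model))
        pe.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return pe

def add_positions(embeddings):
    """Add each token's positional vector to its embedding, element-wise."""
    d_model = len(embeddings[0])
    return [
        [e + p for e, p in zip(emb, positional_encoding(pos, d_model))]
        for pos, emb in enumerate(embeddings)
    ]

tokens = ["My", " name", " is", " Paw", "el"]
# Hypothetical embeddings: identical vectors, so only position tells them apart.
embeddings = [[0.0] * 4 for _ in tokens]
for token, vec in zip(tokens, add_positions(embeddings)):
    print(repr(token), [round(v, 3) for v in vec])
```

The code counts positions from 0 rather than from #1. Because all five embeddings start out identical, the printed vectors differ only because of their positions.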
