How to Build a RAG Chatbot Without Coding (AI PM Series)

Learn about RAG, develop a better AI product intuition, and build an AI-powered chatbot for your PM portfolio. A step-by-step guide with ready-to-use templates.

Hey, Paweł here. Welcome to the premium edition of the Product Compass Newsletter!

Every Saturday, I share actionable insights and resources for PMs.

RAG (retrieval-augmented generation) is a critical concept that every Product Manager working with AI must understand.

But it might be challenging to start without a CS degree.

After 2 weeks of research, I recommend the simplest no-code approach.

This might be the only tutorial that shows how to do it without coding or installing anything locally.

My goal is to help you:

Learn by doing rather than studying theory.

Develop a better intuition for managing AI products.

Build an app for your PM portfolio.

In today’s issue, we discuss:

What is RAG and Why We Need It

🔒 How to Build an AI-Powered RAG Chatbot Step-by-Step

1. What is RAG and Why We Need It

Let’s start with a demonstration of what we will build. Below is a chatbot that provides answers based on files in my Google Drive folder. I instructed it to refuse to answer if it can’t find relevant data.

The folder was initially empty. When I asked it about the North Star Metric, it couldn’t find any information:

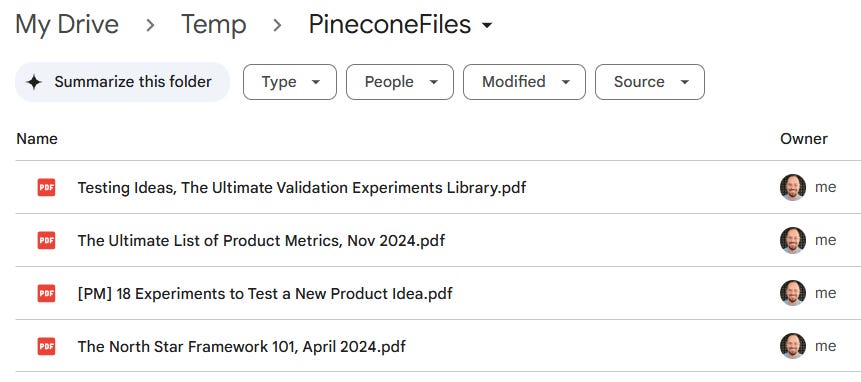

Next, I uploaded 8 files to my Google Drive folder, including “North Star Framework 101, April 2024.pdf”:

I waited 1 minute so that the files could be automatically processed and stored in a vector database (I’ll explain that in a moment).

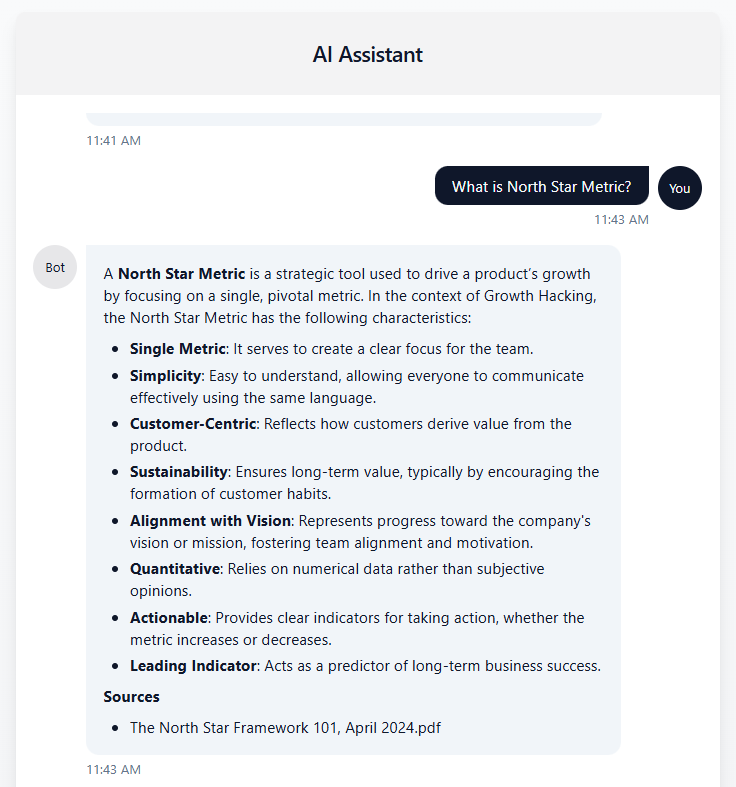

Then, I repeated the same question. This time, the chatbot provided the answer and referenced a specific file:


Why do we need RAG?

You might ask: “Paweł, can’t we just parse all those files and attach their contents to the prompt?”

Not necessarily.

When I worked at Ideals, my team dealt with millions of documents. But the amount of data you can send to an LLM in a single request (its context window) is limited, for example:

GPT-4o: 128,000 tokens (a token is roughly ¾ of an English word)

o3-mini: 200,000 tokens

And you pay for every input token.

So, while including the entire content might work for a single document or a short list, it’s often impossible, or prohibitively expensive, for large amounts of data.
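To see why putting everything into the prompt breaks down, here’s a rough back-of-the-envelope check. It assumes the common rule of thumb of about 4 characters of English text per token (a tokenizer such as OpenAI’s tiktoken gives exact counts), reserves a hypothetical 4,000 tokens for the answer, and uses a made-up corpus; only the two context-window sizes come from the list above.

```python
import math

# Context window sizes (in tokens) for the two models mentioned above.
CONTEXT_WINDOWS = {"gpt-4o": 128_000, "o3-mini": 200_000}

# Rule-of-thumb assumption: ~4 characters of English text per token.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate a text's token count without running a tokenizer."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(documents: list[str], model: str, reserved_for_answer: int = 4_000) -> bool:
    """Check whether all documents, plus room for the answer, fit in one request."""
    prompt_tokens = sum(estimate_tokens(doc) for doc in documents)
    return prompt_tokens + reserved_for_answer <= CONTEXT_WINDOWS[model]


# Hypothetical corpus: 1,000 documents of ~20,000 characters (~5,000 tokens) each.
corpus = ["x" * 20_000] * 1_000

print(fits_in_context(corpus[:10], "gpt-4o"))  # ~50,000 tokens: fits
print(fits_in_context(corpus, "o3-mini"))      # ~5,000,000 tokens: does not fit
```

Even this small hypothetical corpus is about 25 times larger than o3-mini’s window, so you need a way to send only the relevant parts. That’s what RAG does.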

Here comes RAG.

How does RAG work?

Let’s discuss the most common, simple scenario.

When you use RAG, your data (e.g., documents) is not stored in the original format.

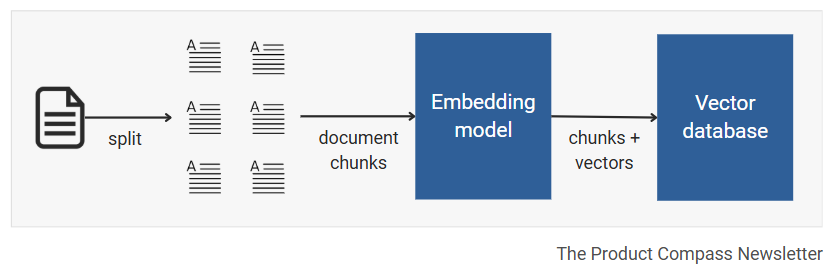

Instead, it’s split into chunks (e.g., 500-1000 characters each), which an embedding model then converts into high-dimensional vectors (embeddings) stored in a vector database:
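A minimal sketch of the chunking step, assuming simple fixed-size character chunks. The 800-character size falls within the 500-1000 range mentioned above; the 100-character overlap is an illustrative assumption that keeps text near a boundary in two neighboring chunks. Production text splitters often try to cut on paragraph or sentence boundaries first, so treat this as an illustration, not as what any specific tool does.

```python
def chunk_text(text: str, chunk_size: int = 800, overlap: int = 100) -> list[str]:
    """Split text into fixed-size character chunks that overlap slightly."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap  # how far each new chunk starts after the previous one
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # this chunk already reaches the end
            break
    return chunks


# Hypothetical 2,200-character document.
document = "North Star Framework. " * 100
chunks = chunk_text(document)
print(len(chunks), [len(c) for c in chunks])
```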

Texts with similar meanings end up with vectors that are close to each other, so we can use those vectors to measure the similarity of text strings, e.g., when performing search, clustering similar data, detecting anomalies, or labeling data.
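Cosine similarity is one common way to measure how close two vectors are: 1.0 means they point in the same direction, and values near 0 mean they have little in common. A minimal sketch with made-up 3-dimensional vectors (real text-embedding-3-small vectors have 1,536 dimensions by default, and their values come from the model, not from hand-picked numbers):

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: dot product divided by both lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy "embeddings", for illustration only.
north_star_chunk = [0.9, 0.1, 0.0]
north_star_question = [0.8, 0.2, 0.1]
pricing_chunk = [0.0, 0.1, 0.9]

print(round(cosine_similarity(north_star_chunk, north_star_question), 3))  # high: related
print(round(cosine_similarity(north_star_chunk, pricing_chunk), 3))        # low: unrelated
```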

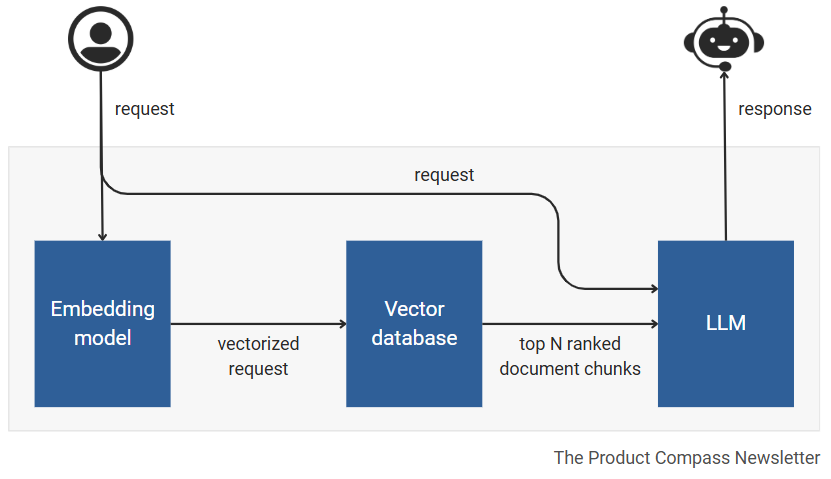

Now, when the user asks a question, the question is converted into a vector by the same embedding model and used to retrieve the most similar document chunks.
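The retrieval step can be sketched as a brute-force top-k search over an in-memory list. This is a simplification: vector databases such as Pinecone use specialized indexes so they don’t have to score every stored vector. The chunks, vectors, and the `retrieve` helper are hypothetical, and the small cosine helper is repeated so the example runs on its own.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(query_vector: list[float], index: list[tuple[str, list[float]]], top_k: int = 2):
    """Return the top_k (chunk_text, score) pairs most similar to the query vector."""
    scored = [(text, cosine_similarity(query_vector, vector)) for text, vector in index]
    scored.sort(key=lambda pair: pair[1], reverse=True)  # most similar first
    return scored[:top_k]


# Hypothetical stored chunks with made-up 3-dimensional embeddings.
index = [
    ("The North Star Metric reflects the value customers get.", [0.9, 0.1, 0.0]),
    ("Input metrics are levers that move the North Star.", [0.7, 0.3, 0.1]),
    ("Our pricing page lists three plans.", [0.0, 0.1, 0.9]),
]
query_vector = [0.8, 0.2, 0.1]  # stands in for the embedded user question

for text, score in retrieve(query_vector, index):
    print(f"{score:.2f}  {text}")
```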

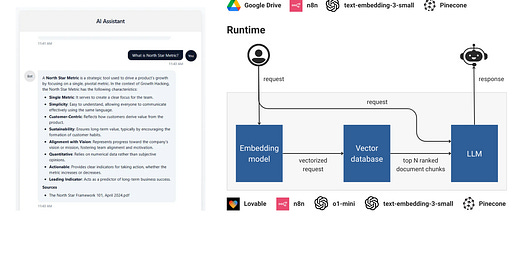

Finally, an LLM uses the retrieved chunks and the original question to generate an answer:
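A minimal sketch of this last step, assuming each retrieved chunk comes back with the name of the file it was taken from. It only assembles the prompt in the role/content message format used by OpenAI’s Chat Completions API; sending it (e.g., with `client.chat.completions.create(model="gpt-4o-mini", messages=messages)` from the `openai` Python package) is left out so the example runs offline. The instruction to say “I don’t know” mirrors the refusal behavior from the demo, but the exact prompt wording and the `build_messages` helper are hypothetical.

```python
def build_messages(question: str, retrieved: list[tuple[str, str]]) -> list[dict[str, str]]:
    """Combine the user's question with retrieved (chunk_text, source_file) pairs."""
    if retrieved:
        context = "\n\n".join(f"[Source: {source}]\n{chunk}" for chunk, source in retrieved)
    else:
        context = "(no relevant documents found)"

    # The system prompt restricts the model to the retrieved context and asks for a citation.
    system_prompt = (
        "Answer the question using only the context below and name the source file you used. "
        "If the context does not contain the answer, say that you don't know.\n\n"
        f"Context:\n{context}"
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]


messages = build_messages(
    "What is the North Star Metric?",
    [("<retrieved chunk text>", "North Star Framework 101, April 2024.pdf")],  # hypothetical chunk
)
for message in messages:
    print(f"{message['role']}: {message['content']}\n")
```

Citing the source file works only because the file name travels with each chunk; that’s how the demo chatbot can reference a specific document.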

In the next section, I demonstrate all those steps in practice.

Info: We’ll explore other RAG variants in a separate infographic/post.

2. How to Build an AI-Powered RAG Chatbot Step-by-Step

In this section, I present everything you need to create a functional chatbot using an LLM and RAG. I also include ready-to-use workflow templates.

The entire process can take between 15 and 45 minutes and doesn’t require coding or installing anything locally.

I encourage you to repeat it, so you can:

Learn about RAG by doing rather than studying theory.

Develop a better intuition for managing AI products.

Build an AI-powered RAG chatbot for your Product Manager portfolio.

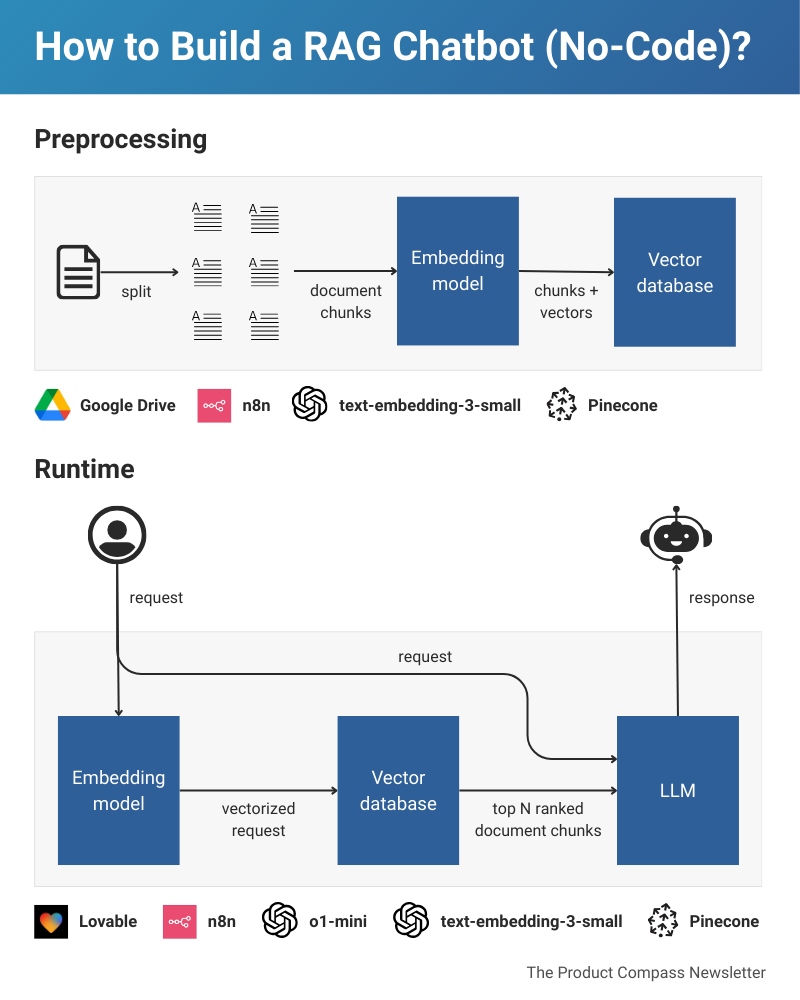

Our tech stack:

UI: Lovable (free version)

Orchestration: n8n (free trial)

LLM: GPT-4o-mini by OpenAI (less than $2 for hundreds of requests)

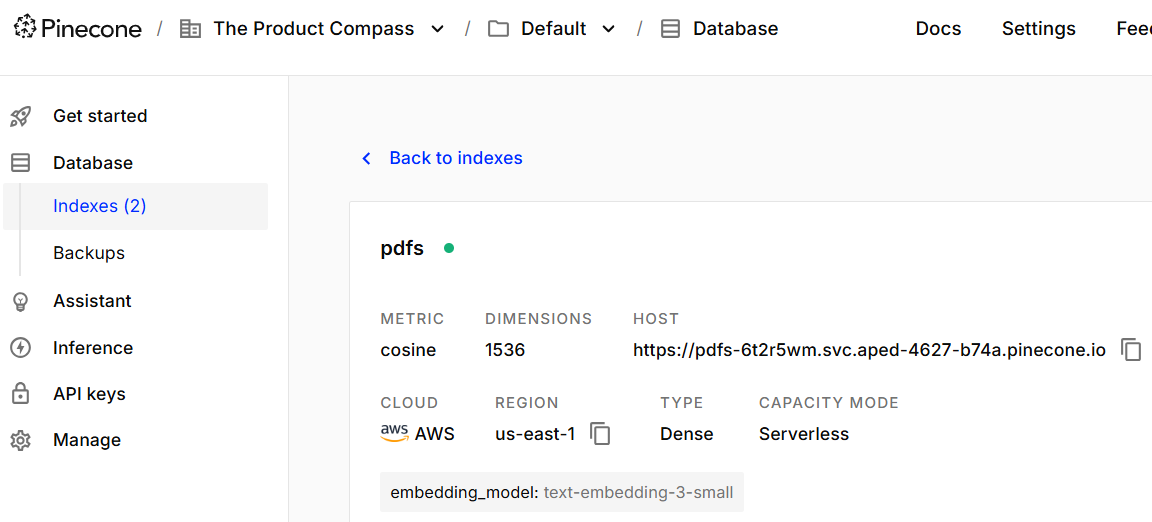

Embedding model: text-embedding-3-small (by OpenAI, supported by Pinecone)

Vector database: Pinecone (free tier, Starter)

Documents store: Google Drive
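To sanity-check the LLM cost estimate, here’s a back-of-the-envelope calculation. The prices are assumptions based on OpenAI’s published GPT-4o-mini list prices at the time of writing ($0.15 per 1M input tokens, $0.60 per 1M output tokens); check the current pricing page before relying on them. The request sizes are hypothetical: each RAG prompt carries the instructions, a few retrieved chunks, and the question.

```python
# Assumed GPT-4o-mini list prices in USD per 1M tokens (verify on OpenAI's pricing page).
INPUT_PRICE_PER_M = 0.15
OUTPUT_PRICE_PER_M = 0.60


def llm_cost(requests: int, input_tokens: int, output_tokens: int) -> float:
    """Estimate the total USD cost for a number of similarly sized requests."""
    total_input = requests * input_tokens
    total_output = requests * output_tokens
    return (total_input * INPUT_PRICE_PER_M + total_output * OUTPUT_PRICE_PER_M) / 1_000_000


# Hypothetical usage: 500 questions, ~4,000 prompt tokens and ~500 answer tokens each.
print(f"${llm_cost(500, 4_000, 500):.2f}")
```

Under these assumptions, 500 requests cost less than a dollar, consistent with the estimate in the list above.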


Step 1: Create a vector database

Here’s what you need to do:

Go to https://app.pinecone.io/ and create a new account.

Create a new organization, e.g., “The Product Compass.”

Create a new index (e.g., “pdfs”) and configure it for OpenAI’s “text-embedding-3-small” model. Other models might not be supported by your orchestration layer.

Go to “API keys”, generate a new key to access your vector database, and copy its value (e.g., “pcsk_**********”).
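Why does the embedding model matter when you create the index? Every vector you store must have exactly as many dimensions as the index was created with. A minimal sketch of that constraint, assuming text-embedding-3-small’s default output size of 1,536 dimensions and the cosine metric. The `INDEX_SETTINGS` dictionary and the `check_vector` helper are hypothetical and not part of Pinecone’s API; in the Pinecone console, choosing the model when you create the index should fill in these values for you.

```python
# Hypothetical index settings mirroring what you configure in the Pinecone console.
# Assumption: text-embedding-3-small returns 1,536-dimensional vectors by default.
INDEX_SETTINGS = {"name": "pdfs", "dimension": 1536, "metric": "cosine"}


def check_vector(vector: list[float], settings: dict = INDEX_SETTINGS) -> None:
    """Raise an error if a vector cannot be stored in the index described by settings."""
    expected = settings["dimension"]
    if len(vector) != expected:
        raise ValueError(
            f"Index '{settings['name']}' expects {expected} dimensions, got {len(vector)}. "
            "Was the vector produced by a different embedding model?"
        )


check_vector([0.0] * 1536)  # matches the index, so no error
try:
    check_vector([0.0] * 768)  # e.g., output of a hypothetical model with a different size
except ValueError as error:
    print(error)
```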


Step 2: Create an n8n workflow to store new Google Drive documents in your vector database (+template)
